Did your survey site kick you out? Do you smell a scam? We've looked at why so many panel members have their survey accounts suspended just as they try to redeem their rewards.

This article is written for informational purposes only. It does not constitute legal advice, and should not be used as such.

Data collection via the internet has revolutionised research methods, but it also poses new risks to data quality. For example, market research provider Cint reported that survey fraud had a significant negative impact on its results for Q4 2022.

This is why survey companies enforce strict measures and can be quick to suspend accounts and forfeit rewards when they suspect fraudulent or inattentive behaviour.

On the other hand, many respondents perceive the methods used by survey companies as unfair, unethical and lacking in transparency. Just look at reviews on sites like Trustpilot and you'll find thousands of complaints from survey-takers who feel the survey site has robbed them of their rewards, often just as they try to redeem after spending hours taking surveys. Isn't that a bit suspicious? The fact is that many survey sites understate the effort required to earn real rewards from taking surveys.

It all sounds so very easy in the beginning, until the time comes to claim your reward. Many people ask whether survey companies deliberately cheat members by making rewards impossible to redeem.

Is the market research industry engaged in unethical behaviour?

In this post, we’ll try to make sense of it all by taking a detailed look at what types of actions can lead to account suspension, how you can prevent it from happening, and how to complain if you feel unfairly treated by the survey site.


4 common reasons for survey account suspension

Here are 4 ways you’re NOT meant to behave on a survey site. These examples have previously been published by one of the largest survey sites.

1. You’re inattentive when taking surveys

The most common reason for inaccurate survey data is inattentive behaviour. Being inattentive means you're not motivated to give thoughtful answers in return for the incentives offered. Inattentive respondents are a wide-ranging group, from students who misunderstand the instructions to people who fill in questionnaires while watching television. They might enter random responses, gibberish or low-effort text. Most importantly, distracted respondents are not always honest. Many consider the reward too small to justify the time and effort, and don't feel the survey site deserves their full attention and focus.

How survey sites try to stop inattentive respondents

- Setting a time limit for answering questions, so that participants don't have time to get distracted by television or the internet.

- Asking participants questions about the instructions after the survey.

- Recording page-view and timing data.

- Saving the page load time and a timestamp each time a question is shown.

- Tracking the number of times the page is minimised or hidden.

- Tracking the amount of time spent reading instructions.

- Looking for unusual timing patterns. Did someone take just 2 seconds to read the instructions? Or 40 minutes to complete the questionnaire, with a five-minute gap between questions?

- Attention checks (aka Instructional Manipulation Checks or IMCs).

- Open-ended questions that require more than a single-word answer.

- Detecting careless responses with measures such as consistency indices or response pattern analysis.
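To make the timing and pattern checks above more concrete, here is a minimal sketch of how a survey site might flag "speeders" (people who finish far faster than everyone else) and "straightliners" (people who give the same answer to every item in a rating grid). It is purely illustrative: the `Response` fields, the `flag_responses` function and the thresholds (under a third of the median completion time, identical answers across a grid) are our own assumptions, not the rules of any real survey site.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Response:
    respondent_id: str
    seconds_taken: float     # total time spent on the questionnaire
    grid_answers: list[int]  # answers to a rating grid, e.g. on a 1-5 scale


def flag_responses(responses, speed_ratio=1 / 3):
    """Return a dict mapping respondent_id to a list of reasons for flagging.

    Hypothetical rules, for illustration only:
    - "speeder": finished in less than speed_ratio of the median completion time
    - "straightliner": gave the same answer to every item in the grid
    """
    typical = median(r.seconds_taken for r in responses)
    flags = {}
    for r in responses:
        reasons = []
        if r.seconds_taken < typical * speed_ratio:
            reasons.append("speeder")
        if len(r.grid_answers) > 1 and len(set(r.grid_answers)) == 1:
            reasons.append("straightliner")
        if reasons:
            flags[r.respondent_id] = reasons
    return flags


responses = [
    Response("a", 600, [4, 2, 5, 3, 4]),
    Response("b", 540, [3, 3, 4, 2, 5]),
    Response("c", 90, [3, 3, 3, 3, 3]),
    Response("d", 660, [5, 5, 5, 5, 5]),
]
print(flag_responses(responses))
# {'c': ['speeder', 'straightliner'], 'd': ['straightliner']}
```

Note that respondent "d" took a normal amount of time and may simply love the product, yet still gets flagged. Simple rules like these can catch honest people too, which is one way false positives arise.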

Our advice: Use HuginX to find reliable survey sites that match your expectations in terms of topics and rewards. That way, you're more likely to stay motivated to give accurate survey answers, even in the long run.

2. You’re dishonest when filling out your profile information

Dishonest respondents submit fake prescreening details to qualify for as many research studies as possible, increasing their earning potential. The impact of lying usually depends on two factors:

The significance of prescreening: In a study that compares respondents by gender, even one participant in either group who isn't who they claim to be could invalidate the study and render the data useless. However, if gender plays no role in the study's design, it doesn't matter whether a respondent has lied about their gender.

The uniqueness of the study: Impostors are more common when studies recruit target groups with low incidence rates in the population. This is because competition for studies is fierce, and studies with broad eligibility fill quickly. To maximise their earnings, dishonest respondents therefore try to access studies that fill up more slowly by claiming to belong to one (or several) specific demographics.

How survey sites try to stop dishonest respondents

Here are some examples of measures taken by survey sites:

Never disclosing in the survey's title or description which characteristics they're looking for, since this could give impostors the information they need to fake their way into the survey.

Re-asking prescreening questions in the first few minutes of the survey (and again at the end, where this doesn't overburden respondents). This lets research companies confirm that your answers are current and relevant, and it can expose dishonest participants who've forgotten their original answers to the prescreening questions.

Asking follow-up questions to the screener that are hard to answer unless you're being honest. If the company requires participants who take antidepressants, you could be asked for the name and dose of your medication. A determined impostor could often look up the answers, but most dishonest respondents don't bother to put in the time and effort!
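The re-asking technique boils down to comparing two sets of answers to the same questions. Below is a minimal sketch of that comparison, assuming profile answers and in-survey answers are both stored as simple question-to-answer mappings. The function name `check_profile_consistency` and the question keys are hypothetical, chosen for illustration; real survey platforms will store and compare this data in their own ways.

```python
def check_profile_consistency(profile, in_survey):
    """Compare answers given at sign-up (profile) with the same
    prescreening questions re-asked inside a survey (in_survey).

    Both arguments are dicts of question -> answer. Only questions that
    appear in both are compared, ignoring case and surrounding spaces.
    Returns the list of questions whose answers don't match.
    """
    mismatches = []
    for question, answer in in_survey.items():
        if question not in profile:
            continue
        original = str(profile[question]).strip().lower()
        repeated = str(answer).strip().lower()
        if original != repeated:
            mismatches.append(question)
    return mismatches


# Hypothetical example: the year of birth given in the survey
# doesn't match the one stored in the member profile.
profile = {"gender": "Female", "year_of_birth": 1985, "takes_antidepressants": "Yes"}
in_survey = {"gender": "female", "year_of_birth": 1991, "takes_antidepressants": "Yes"}
print(check_profile_consistency(profile, in_survey))  # ['year_of_birth']
```

A mismatch isn't proof of lying on its own: as mentioned above, re-asking is also how companies check that your answers are still current, so an out-of-date profile can trigger the same result. Keeping your profile up to date helps avoid this.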

Our advice: Providing false personal details can be a good way to try out a new website, but not on survey sites. If you're caught filling in false profile details, chances are high you'll lose your incentives. Instead of entering false information to protect your personal data, check reviews on trusted sites such as HuginX before signing up for a new site.

3. You’re trying to cheat the system

The third source of bad data is cheaters who submit false answers to surveys. But remember that cheaters are not always trying to deceive: some might be confused about the information in the survey or believe they'll only get paid if they perform "well".

You might believe the study's rewards depend on your "performance" (e.g. you think you'll only get paid if you score 100% on a test, so you search for the right answers). Alternatively, you might think the company only wants a certain kind of response (e.g. always very positive or enthusiastic answers), or you might use aids (such as pen and paper) to perform much better than you really would.

The last type of cheater is someone who doesn't take surveys seriously, perhaps taking them with friends or while drunk. To clarify the distinction: dishonest respondents provide false demographic details to get access to paid opportunities, while cheating respondents give false answers within the questionnaire itself. A single participant can be both dishonest and a cheater, harming data quality on both counts.

It's important to remember that these groups aren't mutually exclusive. A dishonest respondent may use bots, an inattentive respondent may cheat, and so on. There's likely plenty of overlap, since most bad actors don't care which strategies they use to earn money as long as they're maximising their profits!

Our advice: Don't cheat. It's simply not worth it, and it's unethical. Bad survey data leads to wrong business decisions, which can ultimately cause companies to go bust and people to lose their jobs.

How survey sites try to stop cheating respondents

- By recording the time spent on questionnaires, to discourage participants from searching for answers on Google.

- By asking participants a few questions about the task's instructions afterwards (to make sure they understood the task and didn't cheat unintentionally).

- By setting precise criteria for screening the data to identify unusual behaviour.

4. You’re using automation software

Automated bots are semi-autonomous programs designed to complete online surveys with minimal human involvement. Bots are typically identified by random, nonsensical or low-effort text responses. And because they are not human, bots leave other traces that survey sites can use to identify them in their data.

How survey sites try to stop automated bots from taking surveys

Including a CAPTCHA at the beginning of the questionnaire can prevent automated bots from submitting any answers. Also, if the study is highly interactive (such as a cognitive or reaction-time test), bots will not be able to complete it effectively.

Including open-ended questions (e.g., "What did you think of this research?"). Research companies then examine the answers to these questions for low-effort or incoherent responses. Bot answers are often unclear, and the same phrases may turn up in multiple responses.

Checking their database for unnatural answer patterns. Human respondents tend to answer consistently, while an automated system is likely to give identical answers over and over.
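Here is a minimal sketch of how the open-ended answer checks described above might work: answers are normalised (lower-cased, punctuation stripped) so that trivially different copies match, then flagged if they are very short or if the same text comes from several respondents. The function names, the three-word minimum and the duplicate threshold are assumptions made for illustration; real survey sites use their own, usually more sophisticated, methods.

```python
import re
from collections import defaultdict


def normalise(text):
    """Lower-case the answer and keep only words, so punctuation and
    extra spaces don't hide duplicates."""
    return " ".join(re.findall(r"[a-z0-9']+", text.lower()))


def find_suspicious_answers(answers, min_words=3, min_duplicates=2):
    """answers: dict of respondent_id -> open-ended answer text.

    Hypothetical rules, for illustration only:
    - "low effort": fewer than min_words words
    - "duplicate": the same normalised text given by at least
      min_duplicates respondents
    """
    groups = defaultdict(list)
    for rid, text in answers.items():
        groups[normalise(text)].append(rid)

    flags = defaultdict(list)
    for rid, text in answers.items():
        norm = normalise(text)
        if len(norm.split()) < min_words:
            flags[rid].append("low effort")
        if len(groups[norm]) >= min_duplicates:
            flags[rid].append("duplicate")
    return dict(flags)


answers = {
    "r1": "The questions about grocery prices were interesting.",
    "r2": "Good survey, very nice!",
    "r3": "good survey very nice",
    "r4": "ok",
}
print(find_suspicious_answers(answers))
# {'r2': ['duplicate'], 'r3': ['duplicate'], 'r4': ['low effort']}
```

Matching on normalised text only catches exact copies; a bot that rephrases its answers slightly would slip through, which is why checks like this are typically combined with the other signals described in this section.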

Our advice: Survey companies have become pretty sophisticated when it comes to catching automated bots these days. The time and effort required to create a bot to cheat survey companies is better spent on more productive things.

Ways to avoid account suspension

1. Be truthful and attentive

First and foremost, our advice is to be truthful and attentive when taking surveys. Keep in mind that data from online surveys is used to improve products and services, which benefits everyone in the end. If companies make decisions based on "bad data", they might make the wrong investments and waste money on products that nobody wants. In the worst case, a company might go bust and employees might lose their jobs if it cannot recover from a wrong investment.

2. Read through the terms & conditions

Our second piece of advice is to read the terms & conditions carefully when signing up. Seeing rewards added to your account after taking a survey does not mean you will get paid. Survey sites often attach many conditions to paying out rewards of real value. And if you're caught violating the terms, you risk losing everything you've accumulated before you can redeem it.

3. Don't use a VPN

You might be tempted to use a VPN service when taking surveys as an extra layer of protection for your privacy and data. This might sound like a great idea, but it's not going to work, because most major survey sites block VPN traffic completely. They do this mainly to stop survey takers signing up for countries they don't live in, such as someone from India signing up for US survey sites to earn more money.

4. Check reviews on HuginX

Our fourth piece of advice is to check reviews on HuginX to get an idea of the overall survey experience. We believe many respondents resort to inattentive behaviour when they realise how much work is actually involved in getting paid for taking surveys. After hours of taking surveys without getting paid, it's not surprising that some respondents get fed up and resort to "bad behaviour". On HuginX you can also find paid side gigs that let you make the most of your expertise and knowledge.

What to do if a survey site is cheating you

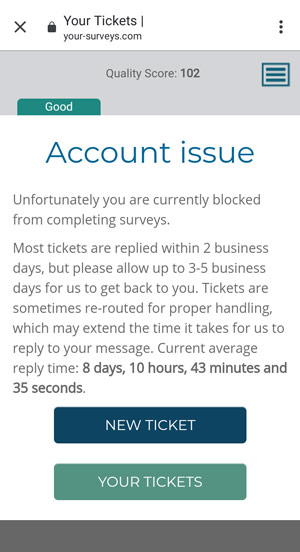

Many respondents get kicked out just after accumulating enough points to redeem their reward, right at the moment when they're finally supposed to receive their hard-earned cash. Why couldn't the survey site have told you sooner? Why wait until you've completed so many surveys to suspend your account? In this situation, it's easy to think the survey site is trying to cheat you and to suspect a scam.

The impact of false positives

Since much of the anti-fraud detection is automated, your account may be flagged as fraudulent by mistake. This is called a false positive, and correcting it requires a human to review your account. If you suspect you're a false positive, contact the survey site and ask for someone to review your case, following our suggested escalation path below.

Is the survey company operating unethically?

On the one hand, you could argue that kicking you out right before you're about to redeem is just a tactic for the company to save money. After all, the survey company may already have sold your survey answers to its clients and invoiced them. Or has the company gone back to its clients to warn them about bad data and refund them for invalid survey responses?

Herein lies the moral dilemma: Is it ethical for survey companies to take rewards away from respondents, but at the same time earn money on their survey responses?

Your rights as a survey taker

While there could be valid reasons to block your account and forfeit your points, survey companies also have obligations to treat their members fairly. Many survey companies are members of market research associations such as ESOMAR, MRS or IA, which are supposed to uphold high professional standards within the industry.

How you’re meant to be treated

Let's take a look at the code of standards published by the Insights Association (IA) as an example. IA is the main market research association in North America, and its membership list includes many of the largest global survey companies.

From IA’s Code of Standards:

- Respect research subjects and their rights as specified by law and/or by this Code.

- Be transparent about the collection of personal data; only collect personal data with consent and ensure the confidentiality and security of such data.

- Act with high standards of integrity, professionalism, and transparency in all relationships and practices.

- Comply with all applicable laws and regulations, as well as applicable privacy policies and terms and conditions, that cover the use of research subjects’ data.

Further, in Section 1: Duty of Care it says that researchers must:

- Balance the interests of research subjects, research integrity, and business objectives with research subjects’ privacy and welfare being paramount.

- Be honest, transparent, fair, and straightforward in all interactions.

- Respect the rights and well-being of research subjects and make all reasonable efforts to ensure that research subjects are not harmed, disadvantaged, or harassed as a result of their participation in research

Source: IA Code of Standards

What does this mean for you as a respondent?

The market research industry association IA clearly states that survey takers should be treated with respect, transparency and integrity. If you feel your rewards have been unfairly forfeited and your account suspended without any explanation other than "violating our terms", we recommend following up on your case.

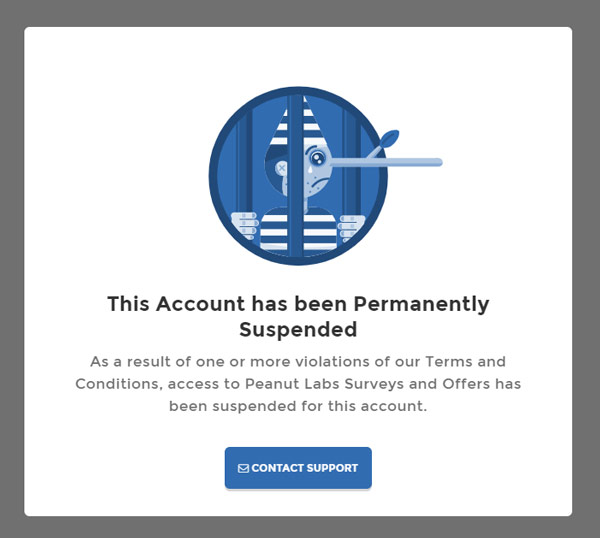

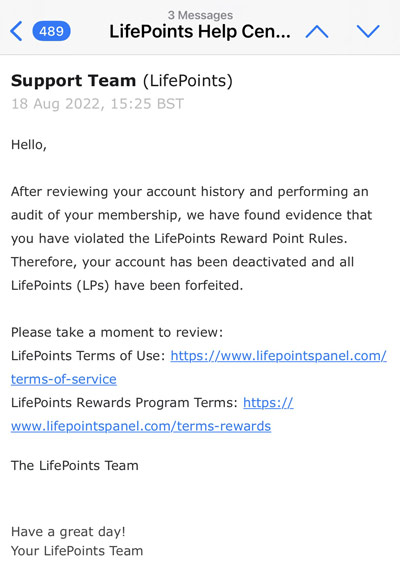

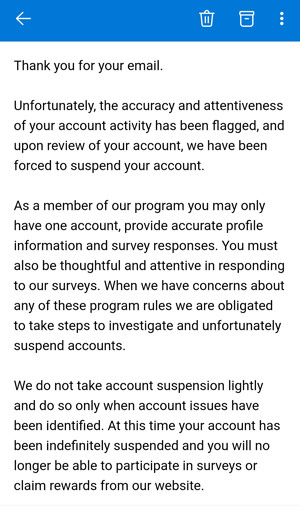

Below is an example of a generic email sent out by one of the larger survey sites. It informs the respondent that their account has been suspended and the rewards forfeited. No explanation is given besides referring to the violation of the site’s terms.

Example of survey suspension email:

Thank you for reaching out.

Kindly note that Surveysite.com is always vigilant in maintaining our community’s integrity. Unfortunately, during our regular reviews, we’ve noticed issues with your account that could negatively impact the integrity of our processes. Our system has detected that you were performing an activity that contradicts the information provided in your member profile or is not in line with our Terms.

As such, the panelist rights for this account have been suspended from our site and your earnings have been forfeited due to the violation of our Terms and Conditions. When participating in activities offered to you by Surveysite.com and its affiliated companies you agreed to supply only true, accurate, honest, current and complete (collectively "Accurate") information. Unfortunately, based on our records, this requirement has not been met, and, subsequently, your membership has been suspended.

If you have received a similar generic email and feel you've been treated unfairly without any "bad behaviour", we suggest proceeding as follows:

7 ways to complain about survey account suspension

- Respondent support: Reach out to respondent support and explain why you think the account suspension was unfounded. The initial response is often a generic template, so follow up and ask for specific details about your case.

- Ask for a supervisor: The respondent support person might not be authorized to make any decisions. If you don't receive a satisfactory answer, ask to have your case escalated internally to the supervisor in charge of the department.

- Parent company complaint: The survey site is usually operated by a parent company under a different name. On HuginX we always list the corporate entity responsible for the survey site, which makes it easier to escalate your complaint further. The person in charge of panels is often the COO – the Chief Operating Officer.

- Market Research Organisations: Make a complaint to Serene (or Self Regulation Engine). With over 60 national and international associations, Serene is a global community of members who voluntarily adhere to the ICC/ESOMAR International Code (and equivalent national codes). Participating members include many of the largest survey companies such as Dynata, Toluna, YouGov, Ipsos and Kantar.

- Social media: Most companies are present on social media, and they care about the reputation of their brand. If you’re not getting any response through the formal channels, this could be a way to get more attention around your case.

- Better Business Bureau (BBB): Submit your case to the BBB, a non-profit organization that serves the US and Canada and assists in resolving disputes between consumers and businesses.

- Consumer rights associations: Make a complaint to the relevant consumer rights organisation in your country. Here are some examples:

- Australia – Australian Competition and Consumer Commission – https://www.accc.gov.au

- Germany – Verbraucherzentrale Hamburg – https://www.vzhh.de

- Northern Ireland – Consumerline – http://www.consumerline.org

- UK – Citizens Advice Guide – https://www.citizensadvice.org.uk/